Can AI Invent Independently? How Will It Impact Innovation?

Hello, my name is Ojaswita. I have been with PatSnap for almost three years now. In that time, I’ve seen the full innovation cycle across our product suite and had the privilege of speaking to hundreds of customers about our tools. One common thread in those discussions has been how artificial intelligence might impact innovation in the future. The following are my opinions on AI’s ability to innovate with its current capabilities.

In recent years, AI has become the “it word” for every product. Nearly every new product has some form of AI integration. Most recently, a new question has arisen: Will AI take over my job? (Which might not be the most innovative question in retrospect, as I’ve always assumed robots would take over the world.) While we know that machines naturally evolve toward automation, we have yet to ascertain how much of the human contribution to innovation and IP AI can replace.

Before looking at how AI impacts innovation in IP, let’s look at how AI is already integrated into the patent world. The data processing power and speed of current machine algorithms allow AI to run prior art or infringement searches, evaluate the validity of invention disclosures, and automatically suggest claim language for new patent drafts. On a more manual level, we are also able to apply valuation metrics to patent documents, extrapolate categorization and classification metrics, and determine potential licensing capacity. To me, these applications show that AI is very good at identifying patterns and extrapolating insights from established patterns.

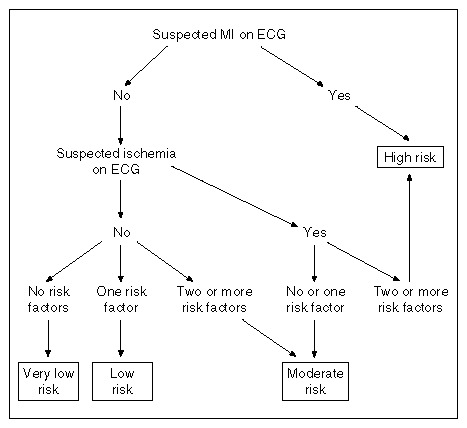

But how does that translate into AI’s ability to actually innovate new products or tools? In the life science space, I see AI slowly creeping into hospitals and drug intelligence. An early example of pattern recognition applied in hospitals is the Goldman Algorithm. (It was not created by AI, but it did eventually lead to a computerized mechanism.) In the late 1990s, Cook County Hospital in Chicago started using the Goldman Algorithm to evaluate the risk of patients with acute chest pain. This established method of pattern recognition allowed the hospital to categorize and assess patient needs more accurately and to reduce the misdirection of hospital resources. The automated format of pattern recognition has shown a 70% higher success rate in patient diagnosis.

Fig 1. Goldman Algorithm used by Cook County

Going forward, I see AI applying similar methods of pattern recognition to patient risk assessment, so that medical professionals can gauge a patient’s health trajectory from raw data on lifestyle patterns. With a better assessment of an individual patient’s genetic predisposition and lifestyle patterns, researchers can use large databanks of clinical trial data, patient case studies, and AI sequencing techniques to build individualized medical treatments for patients.

The current use cases of AI-driven algorithms seem to suggest that AI could eventually invent new ways of solving human problems. However, we have not been using AI long enough to fully understand the limits of its ability to distinguish between verified and unverified data. The current research process, regardless of industry, requires an iterative method of study. For data to be validated, scientists must have a process that can be repeated and that produces similar conclusive results with each repetition. While AI has good pattern recognition capacity, the information educating the system now is peer-reviewed; therefore, we know that the information is to some degree “correct” by current standards. Eventually, however, the data the AI uses to expand its understanding will be data produced by the AI itself, not additional data supplied by a third-party source.

The more the AI relies on its own output rather than on guidance verified by a third party, the worse that output will become. The best example of this, in my understanding, is AI art generation. In the first few iterations of art generation, the AI pulls from external sources as references for what the product should be. After a point, however, the AI is pulling from art it has created itself rather than from a verified source of reference. At this point, the art becomes more abstract, and a viewer who is unaware of the input would not be able to tell what the system was meant to produce. (I guess AI can’t replace the artistic mastery of Bob Ross... yet?)
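To make that feedback loop a little more concrete, here is a toy sketch in Python. It is not a model of any real AI system or art generator: the "model" simply estimates the average and spread of a handful of numbers, then produces the next generation of data from its own estimate with no fresh outside input. The function name `simulate_self_training` and all of its parameters are my own, purely for illustration.

```python
import random
import statistics


def simulate_self_training(generations=200, sample_size=10, seed=0):
    """Toy feedback loop: each generation learns only from the previous one's output.

    Returns the spread (population standard deviation) of the data at each
    generation, with the externally sourced data at index 0.
    """
    rng = random.Random(seed)

    # Generation 0: "verified" external data from a fixed reference distribution
    # (an assumption made for this illustration).
    data = [rng.gauss(0.0, 1.0) for _ in range(sample_size)]
    spread_history = [statistics.pstdev(data)]

    for _ in range(generations):
        # "Train" a trivial model: estimate mean and spread from the current data.
        mean = statistics.fmean(data)
        spread = statistics.pstdev(data)

        # The next generation sees only samples produced by that model,
        # with no fresh external data mixed in.
        data = [rng.gauss(mean, spread) for _ in range(sample_size)]
        spread_history.append(statistics.pstdev(data))

    return spread_history


if __name__ == "__main__":
    history = simulate_self_training()
    for generation in (0, 50, 100, 200):
        print(f"generation {generation:3d}: spread = {history[generation]:.3g}")
```

In this simplified setup, the spread of the data tends to shrink from one generation to the next, because every round of estimation loses a little of the original variety and nothing external restores it. Real systems are far more complex, but the sketch captures the kind of narrowing I am describing.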

My concluding opinion is that AI will never be able to fully support long-term innovation. The introduction of machine-generated content will limit the extrapolation capabilities of AI as it exists today. Rather, AI is a tool best used where automation has obvious advantages, freeing up more time for human innovation that can continuously feed the AI’s knowledge bank. (Or maybe AI is the first of its kind in a barren world, looking for its perfect robot partner to enhance its capacity, just like Wall-E and Eve.)
